XR Interaction Design Intern

Timeline

February - December 2023

Tools

Blender, Unity, Stable Diffusion, After Effects, Mapbox, Rokoko, XREAL

I joined the BMW Innovation Lab in Munich, Germany, where I worked on shaping the future vision of the vehicle experience.

As part of a design-driven research team, we explored how XR as a medium can enhance communication between the vehicle and its passengers, creating a cozier and more immersive in-car experience.

I contributed my navigation design to the BMW team's work, which was presented at CES 2024 in Las Vegas.

Information Matters: Beyond the HUD

As technology evolved rapidly, our Innovation Lab set out in 2023 to build on BMW's pioneering legacy. Reflecting on BMW's historical milestones and analyzing current industry developments, we posed the question:

How can augmented reality further enhance and redefine the in-car experience?

Spatial computing for the in-car experience

It all began with a single question:

How might we use AR to rethink what's possible inside the car?

What if we could place virtual content in the real-world environment?

In this way, AR glasses can do more...

- Large, movable field of view

- A natural extension of the conventional head-up display

- Entertainment for passengers (immersive experiences, enhanced gaming, 3D videos)

- Improved safety for the driver (driving-relevant content displayed where you need it, so you can keep your eyes on the road)

Advantages of BMW AR Glasses over in-car displays

Display content where you want it

- AR glasses have a far larger field of view than in-car displays, so content can be placed anywhere in the environment.

Personalized content for everyone

- Each person in the car can receive customized content, making every ride a unique experience.

Immersive riding experience

- Virtual elements blend seamlessly with the real world to create fully immersive experiences.

Competitive Analysis (2023)

Recognizing the shortcomings identified in this analysis as critical barriers to meaningful AR passenger interactions, we focused our efforts on overcoming these limitations to unlock the full potential of AR-enhanced in-car experiences.

Persona Context: CES in Las Vegas

Functions to bring to life

To explore these possibilities, we generated a wide range of ideas through brainstorming and mapped them using an impact-effort matrix. Each concept was positioned based on user impact and ease of implementation, then categorized into three themes: Vehicle Control Integration, Environmental Awareness, and Entertainment-focused. This method helped us identify high-value, feasible directions to prioritize in the design process.
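The matrix step can be sketched in Python as a small classifier that sorts ideas into quadrants and groups them by theme. This is an illustration under stated assumptions, not the team's actual tool: the 1-5 scales, the threshold of 3, and the quadrant labels are hypothetical, and only the three theme names come from the text above.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical scale: impact and effort are each rated 1 (low) to 5 (high).
# Scores at or above THRESHOLD count as "high"; the value 3 is an assumption.
THRESHOLD = 3


@dataclass
class Idea:
    name: str
    theme: str   # e.g. "Vehicle Control Integration", "Environmental Awareness", "Entertainment"
    impact: int  # user impact, 1-5
    effort: int  # implementation effort, 1-5 (higher = harder)


def quadrant(idea: Idea) -> str:
    """Place an idea in one of the four impact-effort quadrants."""
    high_impact = idea.impact >= THRESHOLD
    high_effort = idea.effort >= THRESHOLD
    if high_impact and not high_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if not high_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(ideas: list[Idea]) -> list[Idea]:
    """Keep high-impact ideas: quick wins first, then higher impact, then lower effort."""
    rank = {"quick win": 0, "major project": 1}
    kept = [i for i in ideas if quadrant(i) in rank]
    return sorted(kept, key=lambda i: (rank[quadrant(i)], -i.impact, i.effort))


def group_by_theme(ideas: list[Idea]) -> dict[str, list[str]]:
    """Collect idea names under their theme."""
    groups: dict[str, list[str]] = defaultdict(list)
    for idea in ideas:
        groups[idea.theme].append(idea.name)
    return dict(groups)
```

Sorting on (quadrant, impact, effort) is one way to make the "high-value, feasible first" ordering explicit; real workshops usually place ideas by discussion rather than fixed scores.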

AR Ride Use Cases Exploration

Spatial Navigation

- AR glasses provide real-time, on-road navigation overlays, ensuring drivers keep their eyes on the road.

- Key route guidance, lane change prompts, and turn-by-turn directions are seamlessly integrated into the driver's field of view.

Signs & Hazard Warnings

- AR-enhanced hazard detection alerts drivers about pedestrians, cyclists, and unexpected obstacles.

- Real-time traffic sign recognition displays speed limits, stop signs, and warnings directly in the driver’s line of sight.

- Adaptive alerts for potential collisions, blind-spot monitoring, and lane departure warnings.

Parking

- Visualized parking distance alerts help drivers park precisely with augmented parking guidelines.

- 360-degree AR overlays enhance spatial awareness by integrating vehicle cameras and sensors.

Entertainment

- AR glasses offer immersive media experiences, allowing passengers to watch movies, play AR-enhanced games, or browse interactive content.

- Multi-screen capability enables personalized entertainment without interfering with the driver’s focus.

Image resource: https://wayray.com/deep-reality-displa

BMW + XREAL: AR Platform

Input Methods Exploration

Looking ahead, we see strong potential in more ambient, low-effort input methods—such as voice control and touch-sensitive fabric panels—tailored to the dynamic in-car context.

Giving Form to the Invisible

I designed a comprehensive set of simplified, modern visual elements tailored to the see-through nature of AR glasses. The goal was to maintain legibility and spatial clarity across varied lighting conditions while improving navigational awareness and safety.

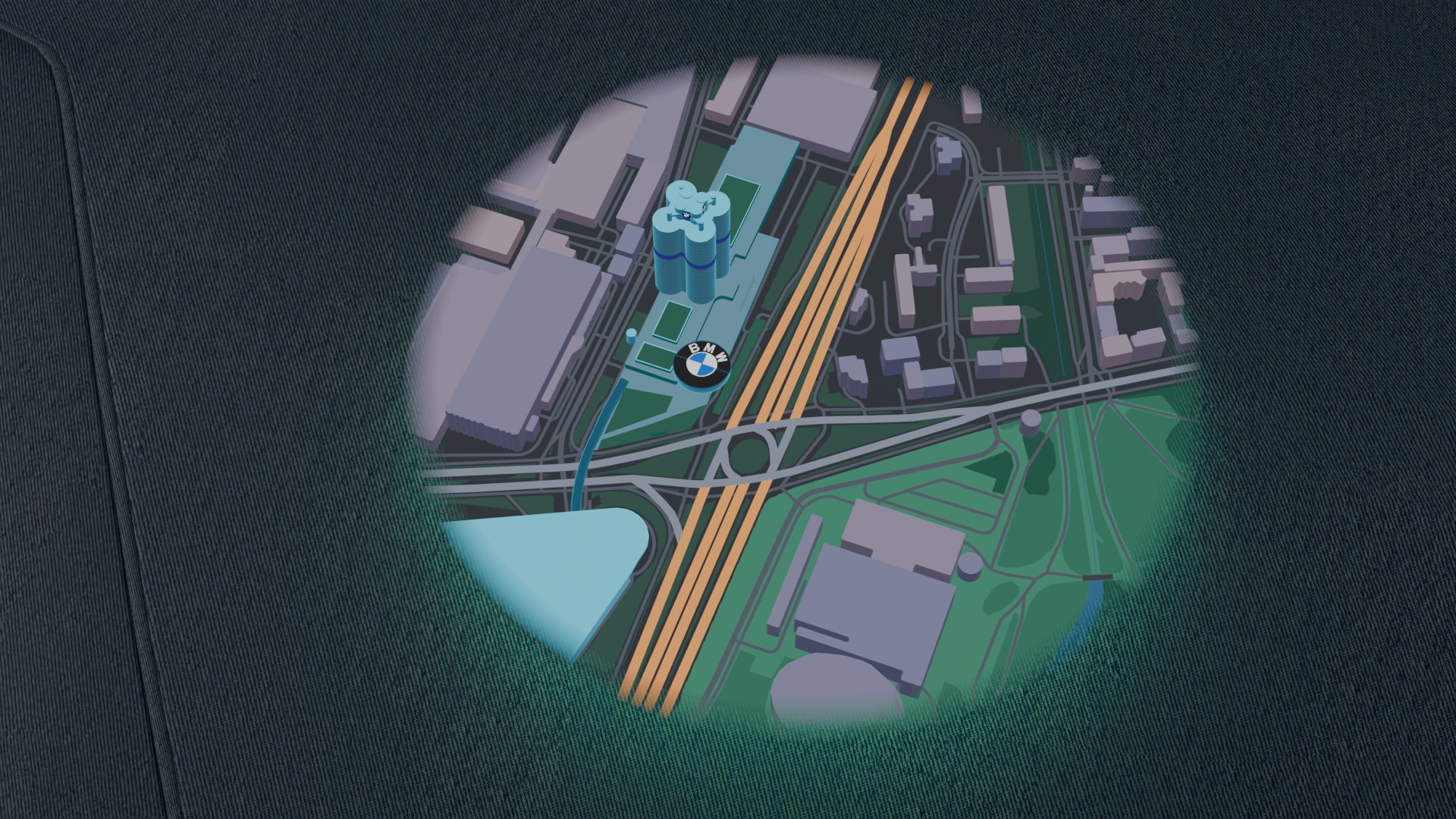

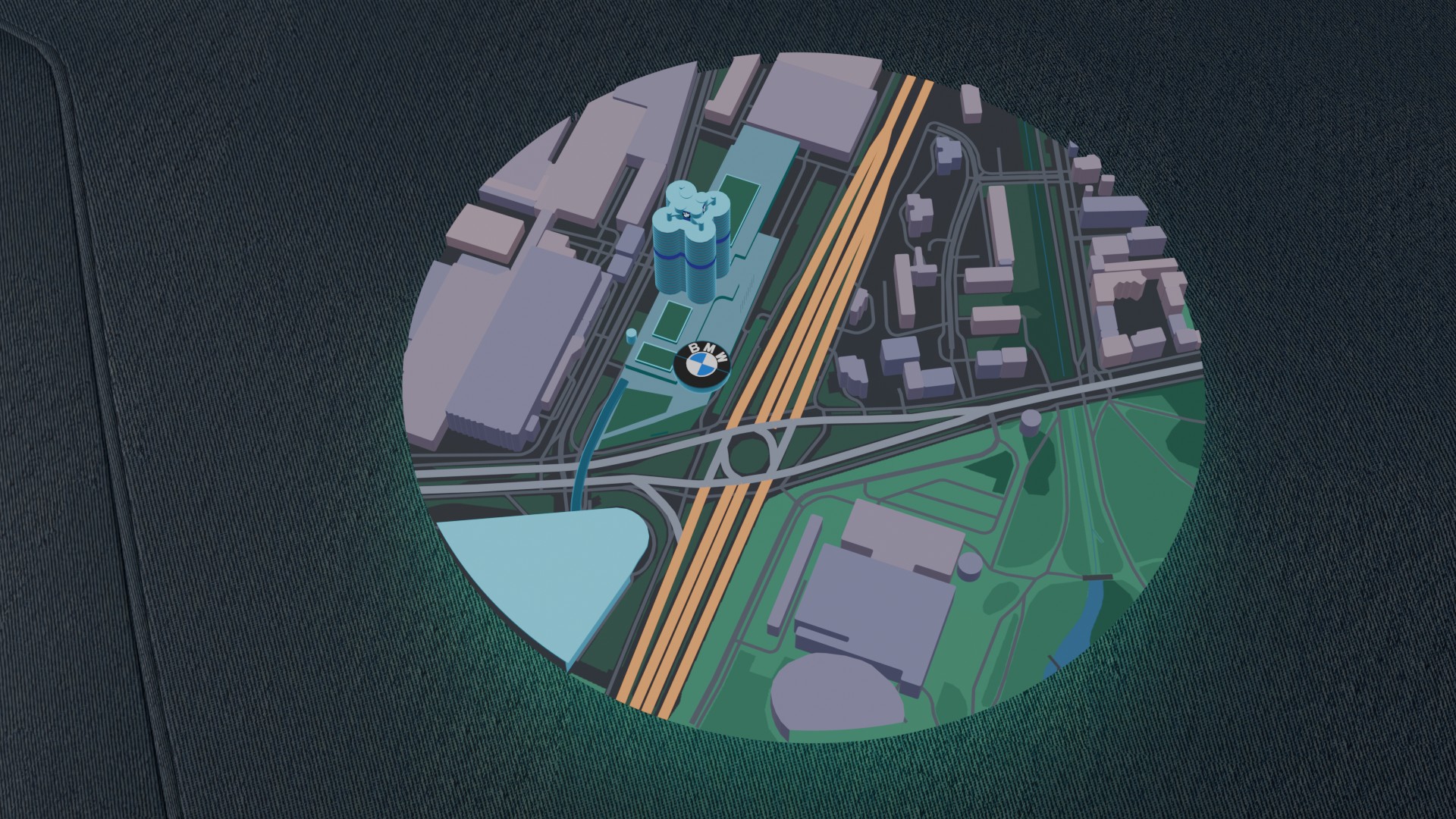

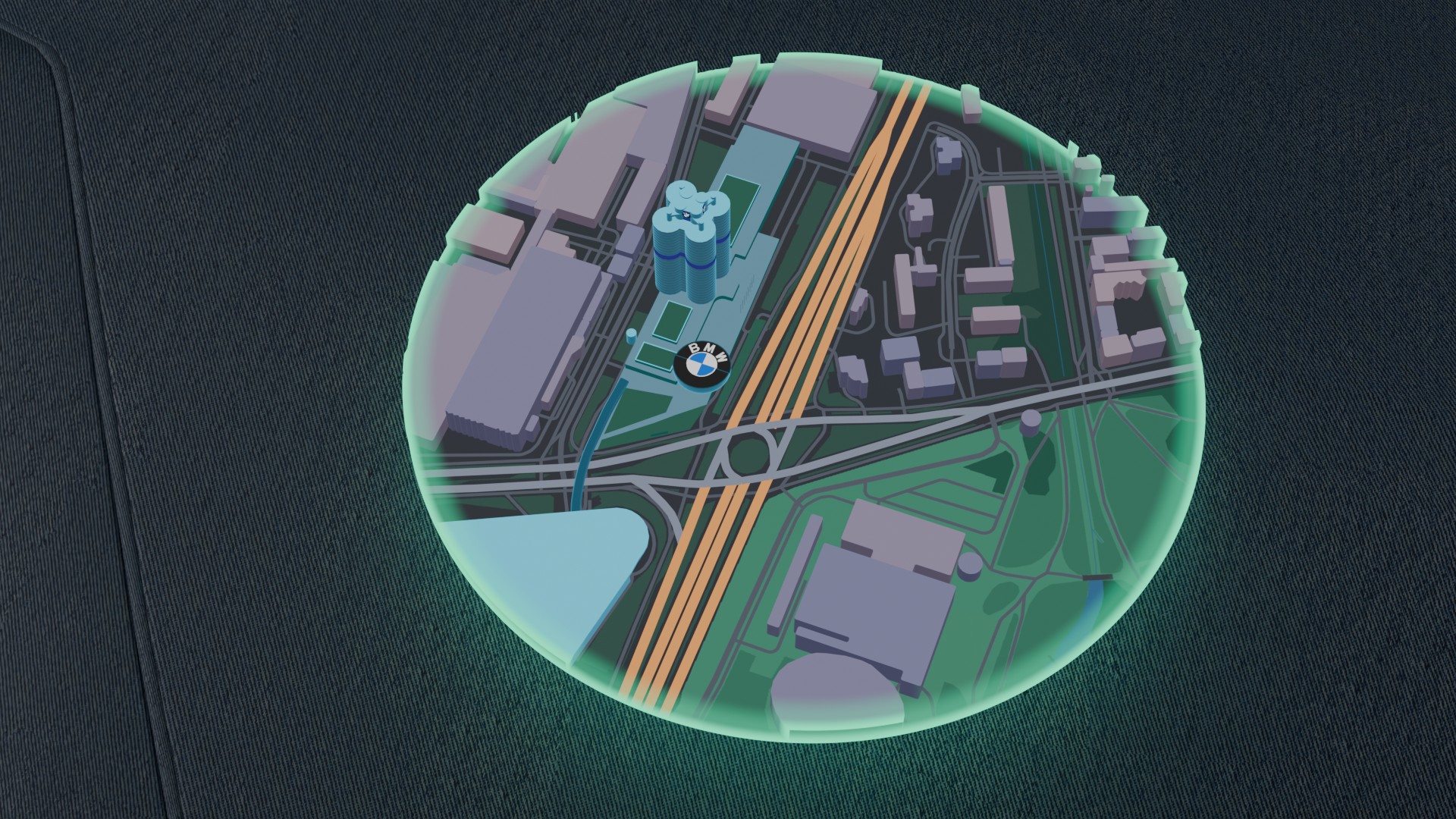

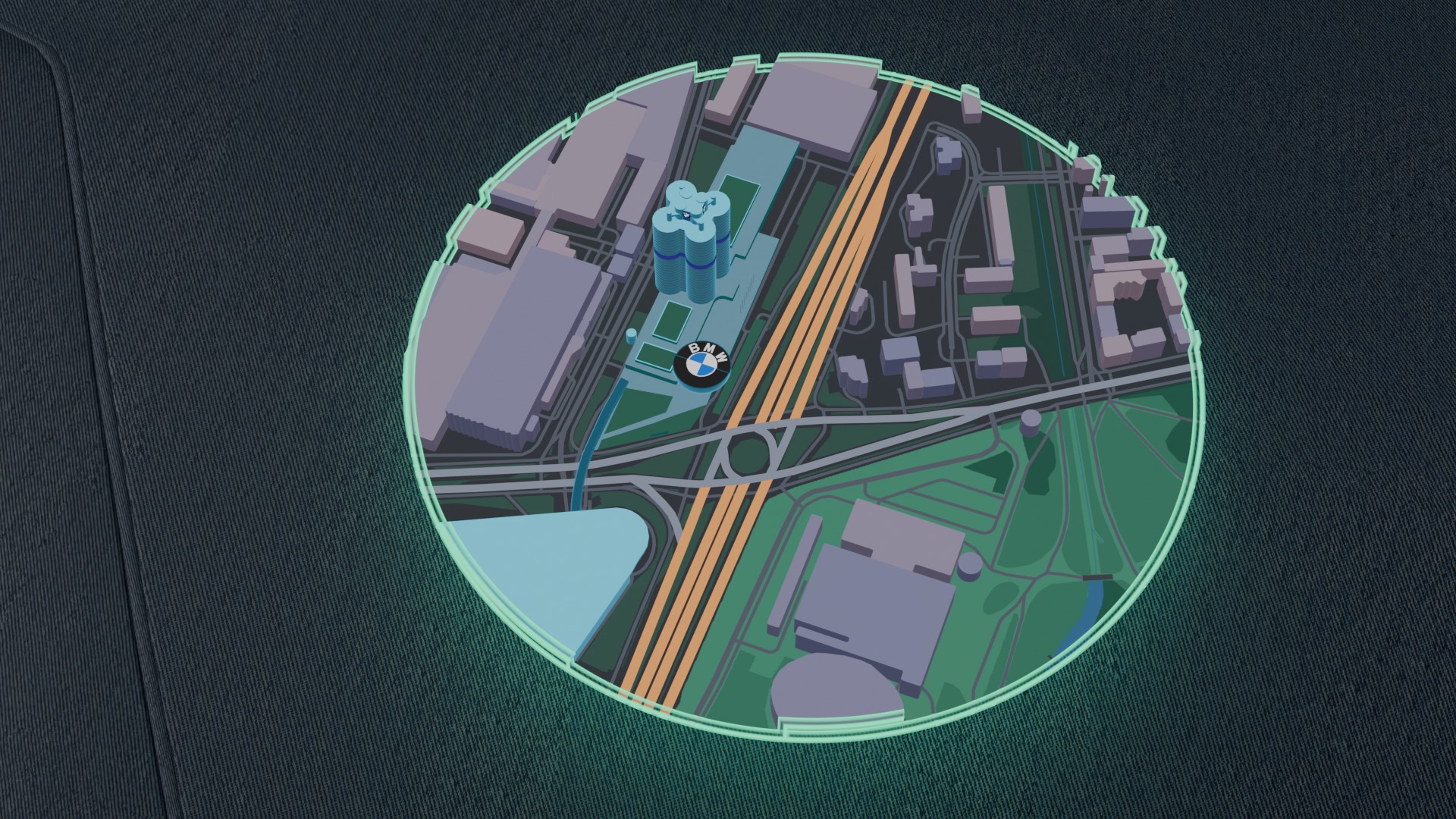

Map System: Day & Night Visualization

Map Periphery

Procedural LOD for Spatial Hierarchy

Prototype

From Design to Runtime

System Interface Diagram

Visual styling

These shaders let us control the look and feel of map boundaries and spatial transitions through dynamic animation and layered effects, and they informed many of our later aesthetic and interaction decisions.
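The shaders themselves are not shown here. As a hedged illustration of a layered boundary effect, the Python sketch below computes on the CPU the opacity a fragment shader might output near a map edge: a `smoothstep` fade toward a circular boundary (the same Hermite curve as the GLSL/HLSL built-in) plus a time-driven pulse confined to the fade band. The circular boundary, `fade_width`, and the pulse parameters are assumptions for illustration, not values from the project.

```python
import math


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """GLSL/HLSL-style smoothstep: 0 below edge0, 1 above edge1, Hermite curve between."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def boundary_alpha(x: float, y: float, radius: float = 1.0, fade_width: float = 0.25,
                   time: float = 0.0, pulse_speed: float = 2.0,
                   pulse_amount: float = 0.1) -> float:
    """Opacity of a map fragment at (x, y), fading out toward a circular boundary.

    All parameters are hypothetical defaults chosen for illustration.
    """
    dist = math.hypot(x, y)
    # Layer 1: static fade, 1 inside the map and 0 beyond the boundary.
    edge = 1.0 - smoothstep(radius - fade_width, radius, dist)
    # Layer 2: animated pulse, weighted so it peaks mid-band and vanishes at both ends.
    band = edge * (1.0 - edge) * 4.0
    pulse = pulse_amount * band * math.sin(time * pulse_speed)
    # Clamp to a valid opacity, as a shader's saturate() would.
    return min(max(edge + pulse, 0.0), 1.0)
```

Keeping the pulse inside the fade band leaves the map interior steady while only the edge animates, which is one way to signal a boundary without pulling attention from the route.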

I created a JSON-based visual stylesheet to systematically manage color, path weight, and edge behavior across map elements.

This approach not only ensured a consistent visual language throughout the interface, but also made the system significantly easier to maintain, customize, and extend as the project evolved.

```json
{
  "BMW_Landmark_Building": {
    "baseColor": "#58B4D4",
    "roofColor": "#2C8AAE",
    "emissiveHighlight": "#8ADFFF",
    "cornerRadius": 0.35,
    "material": {
      "useFresnel": true,
      "fresnelColor": "#A3ECFF",
      "fresnelPower": 3.0,
      "fresnelOpacity": 0.4,
      "useAO": true,
      "aoIntensity": 0.3,
      "aoMap": "AO_GenericSoft"
    },
    "outline": {
      "enabled": false,
      "color": "#B0F5FF",
      "thickness": 0.15
    },
    "animation": {
      "entranceFadeIn": true,
      "hoverPulse": true
    }
  }
}
```
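One way to consume a stylesheet like this is a small loader that fills in defaults, so each element declares only what differs. The Python sketch below assumes that approach; `DEFAULTS`, `merge`, `load_styles`, and `hex_to_rgb` are hypothetical names, and the project's actual loader is not described in this case study.

```python
import copy
import json

# Hypothetical fallbacks for keys a style entry omits.
DEFAULTS = {
    "cornerRadius": 0.0,
    "material": {"useFresnel": False, "useAO": False},
    "outline": {"enabled": False},
    "animation": {"entranceFadeIn": False, "hoverPulse": False},
}


def merge(base: dict, override: dict) -> dict:
    """Return a deep copy of base with override applied; nested dicts merge key by key."""
    out = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(out.get(key), dict):
            out[key] = merge(out[key], value)
        else:
            out[key] = value
    return out


def hex_to_rgb(hex_color: str) -> tuple[float, float, float]:
    """Convert '#RRGGBB' to 0-1 floats (no gamma or color-space conversion)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) / 255.0 for i in (0, 2, 4))
    return (r, g, b)


def load_styles(text: str) -> dict:
    """Parse the stylesheet and fill each element's missing keys from DEFAULTS."""
    raw = json.loads(text)
    return {name: merge(DEFAULTS, style) for name, style in raw.items()}
```

With this pattern, adding a new element type (say, a route segment) only requires the keys that differ from the defaults, and the deep copy keeps one element's overrides from leaking into another's.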